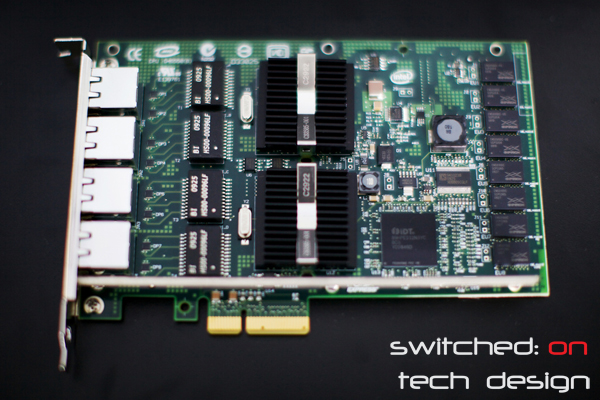

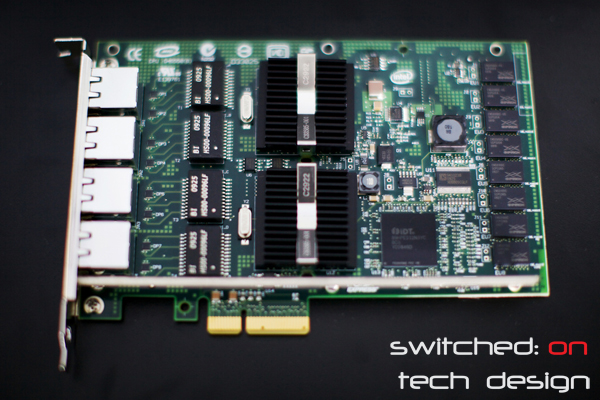

One of the more common quad-port network cards that pops up online is the Intel Pro/1000 PT. It is a 2006 Intel design, discontinued in 2009, and server pulls generally sell for anywhere from AU$120-160. It uses two Intel 82571 controllers, each driving two gigabit ports, and is a PCI-E 1.0 x4 card. The controllers are widely supported and work out of the box with most operating systems and hypervisors. The card also works in PCI-E 2.0 and 3.0 slots (running at PCI-E 1.0 speeds), and it comes in both low-profile and full-height variants.
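As a rough sanity check on the x4 link, the arithmetic below compares raw PCI-E 1.0 bandwidth with the combined line rate of four gigabit ports. It is a sketch that assumes the nominal 2.5 GT/s per lane and 8b/10b encoding and ignores packet overhead, so real-world headroom is somewhat smaller than shown.

```python
# Rough per-direction bandwidth check for the Pro/1000 PT quad-port card.
# Uses nominal PCIe 1.0 signalling figures; real throughput is lower once
# packet (TLP) headers and other protocol overhead are accounted for.

PCIE1_GT_PER_LANE = 2.5        # PCIe 1.0: 2.5 GT/s per lane
ENCODING_EFFICIENCY = 8 / 10   # PCIe 1.0 uses 8b/10b line encoding
LANES = 4                      # the card is an x4 device
PORTS = 4
PORT_GBPS = 1.0                # gigabit Ethernet, per direction (full duplex)


def pcie_gbps(lanes: int) -> float:
    """Usable PCIe 1.0 bandwidth per direction in Gb/s, before protocol overhead."""
    return PCIE1_GT_PER_LANE * ENCODING_EFFICIENCY * lanes


def ethernet_gbps(ports: int) -> float:
    """Aggregate Ethernet line rate per direction in Gb/s."""
    return PORT_GBPS * ports


if __name__ == "__main__":
    bus = pcie_gbps(LANES)
    nics = ethernet_gbps(PORTS)
    print(f"PCIe 1.0 x{LANES}: {bus:.1f} Gb/s per direction")
    print(f"{PORTS} x 1GbE:    {nics:.1f} Gb/s per direction")
    print(f"Headroom:       {bus / nics:.1f}x")
```

At roughly 8Gb/s per direction against 4Gb/s of Ethernet traffic, the x4 link should comfortably keep up even with all four ports busy in both directions, and since the card runs at PCI-E 1.0 rates in newer slots too, the same figures apply there.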

One of the appealing features of these cards, beyond the four gigabit ports themselves, is teaming (also called trunking or bonding; the standardised form is link aggregation, IEEE 802.3ad). Teaming lets you combine anywhere from one to all four ports into a single logical link between the card and another device. Note that you won't get 4Gb/s in a single transfer this way: traffic is spread across four gigabit pipes rather than one 4Gb pipe, and any one connection stays on a single port. This is particularly useful when the traffic going to your server exceeds what a single gigabit link can carry, and it can noticeably improve network performance, provided your switch supports 802.3ad too.
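To see why a single transfer tops out at one gigabit, it helps to look at how traffic is assigned to ports. The sketch below uses a made-up, simplified hash of IP addresses and TCP ports, loosely in the style of the Linux bonding driver's `layer3+4` policy but not its exact algorithm (the OS and the switch each apply their own policy). The `Flow` type, the `member_link` function and the addresses are all hypothetical.

```python
# Simplified illustration of how link aggregation spreads traffic.
# Each flow is hashed to ONE member link, so a single transfer never
# exceeds one port's 1 Gb/s; only many flows together can use all four.
# The hash is a made-up simplification, not any real driver's algorithm.

import ipaddress
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int


def member_link(flow: Flow, links: int) -> int:
    """Pick the member link (0..links-1) that carries every packet of this flow."""
    src = int(ipaddress.ip_address(flow.src_ip))
    dst = int(ipaddress.ip_address(flow.dst_ip))
    # Mix the TCP/UDP ports with the low 16 bits of the XORed addresses.
    h = (flow.src_port ^ flow.dst_port) ^ ((src ^ dst) & 0xFFFF)
    return h % links


if __name__ == "__main__":
    LINKS = 4
    server = "192.168.1.10"

    # One large copy from a single client: every packet hashes the same way.
    single = Flow("192.168.1.50", server, 50000, 445)
    print("single transfer uses link", member_link(single, LINKS))

    # Twenty clients (same source port, for simplicity): flows spread out.
    clients = [Flow(f"192.168.1.{100 + i}", server, 50000, 445) for i in range(20)]
    print(Counter(member_link(f, LINKS) for f in clients))
```

Every packet of the single copy lands on the same link, while the twenty clients are spread across all four. Keeping each conversation on one link is deliberate: it avoids packets arriving out of order.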

The card's TDP is 12.1W, and the controller heatsinks get quite hot to the touch without adequate airflow over the card. These cards were designed for high-airflow server chassis, so if you're putting one in a quiet home server, consider how much airflow it will actually get. The controllers can be passed through to VMs on hypervisors such as ESXi. Keep in mind that you're passing through one or both controllers rather than individual Ethernet ports, so the ports can only be passed through in pairs.
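The pairing constraint can be modelled as a simple mapping from controllers to ports. This is a hypothetical sketch: the port numbering, the `CONTROLLERS` table and `passthrough_plan` are illustrative names, not anything ESXi exposes.

```python
# Hypothetical model of passthrough granularity on the quad-port card:
# ports 0-1 sit on the first 82571, ports 2-3 on the second, and the
# hypervisor hands whole controllers to a VM. Names are illustrative only.

CONTROLLERS = {
    "82571 #1": {0, 1},
    "82571 #2": {2, 3},
}


def passthrough_plan(wanted_ports: set[int]) -> tuple[set[int], set[int]]:
    """Return (ports given to the VM, ports left for the host) for the requested ports."""
    to_vm: set[int] = set()
    for ports in CONTROLLERS.values():
        if ports & wanted_ports:
            to_vm |= ports  # passing one port through takes its sibling too
    all_ports = set().union(*CONTROLLERS.values())
    return to_vm, all_ports - to_vm


if __name__ == "__main__":
    print(passthrough_plan({2}))
```

Asking for port 2 alone, for example, hands ports 2 and 3 to the VM and leaves ports 0 and 1 for the host.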

This card represents quite good value for the home/SMB user who needs more ports, whether to separate network traffic or to relieve bandwidth congestion. Its wide compatibility is also an advantage for those using motherboards without Intel network controllers, and it should work out of the box with just about any modern OS, including ESXi. At about a third of the cost of its newer counterpart, the i350-T4, it's definitely a card to consider if you're looking for an Intel NIC but don't want to buy new.