With the release of the Fractal Design Define R4 we have been asked a few times whether Corsair’s self-contained liquid cooling system, the H100, will fit in the front. Placement here has a few advantages over placing it in the top of the chassis: noise is reduced, for a start, and if you use the top as an intake you lose the built-in dust filters. You could use the H100 in an exhaust configuration in the top position, but temperatures will not be as good because you will be drawing in warm air from inside the chassis to cool the CPU rather than the cooler outside air.

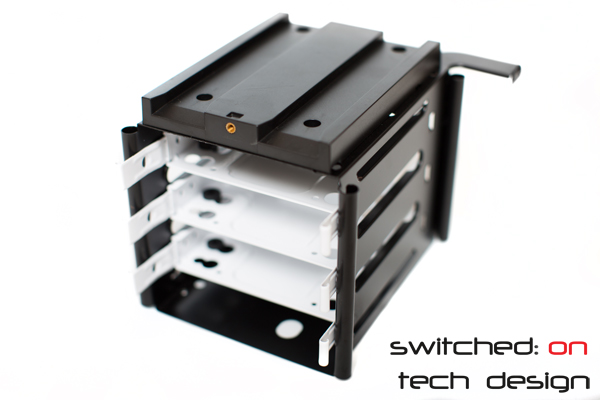

That leaves the front as an ideal position from the perspective of noise and cooling; the Define R3 did not allow this placement without drilling out the front drive bays. Since the R4 allows you to remove the front drive trays without permanently modifying the chassis, how does the H100 fare in terms of fit?

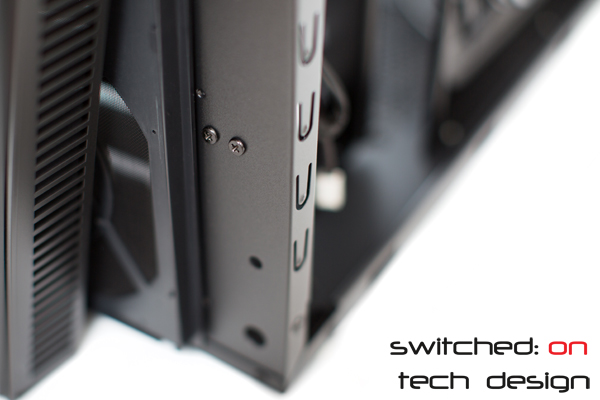

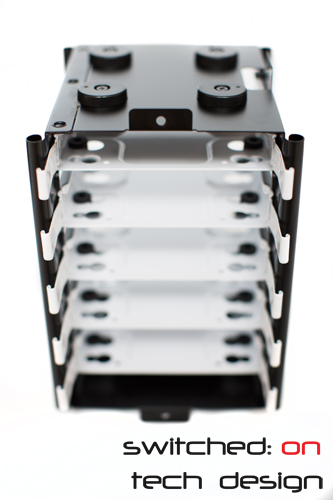

As it turns out, it fits beautifully:

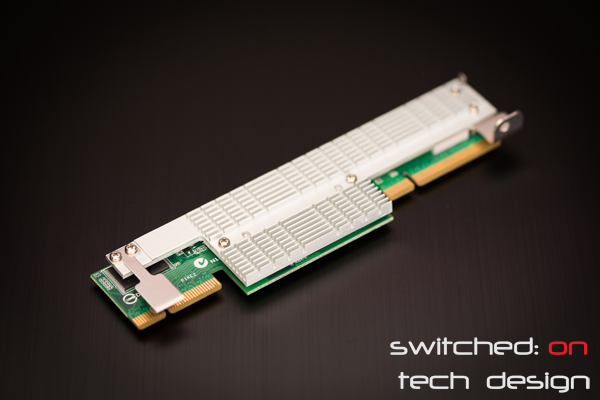

Because the front has been upgraded to take 140mm fans as well as 120mm fans, there’s a little bit of space below the cooler. This hasn’t proven to be an issue in our testing, though you could easily put a baffle in (foam, tape etc.) if it bothered you. You can see the coolant tube placement here:

There’s a reasonable amount of slack there – it’s definitely not applying an undue amount of pressure on the tubes.

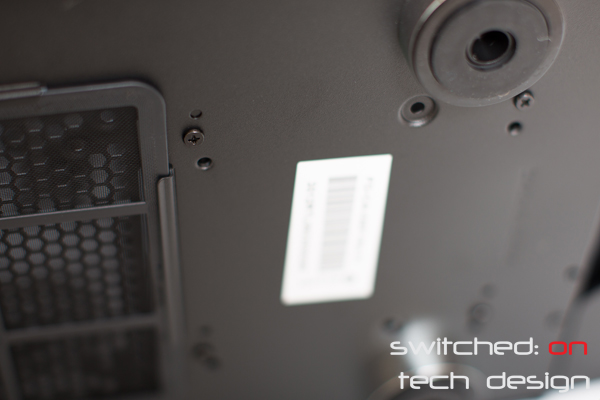

From the front we can see:

You can see the gap at the bottom (and a slight one at the sides) more clearly here. For those concerned about aesthetics, you can’t see anything when the filters are back in place:

Comparing top and front mounting in practice, the front is notably quieter and temperatures are a few degrees better, which may be important if you’re pushing the boundaries with the all-in-one units and don’t want to go to a full-blown watercooling setup. It’s well worth the effort to install it in the front rather than the top if you don’t need the 3.5″ bays!