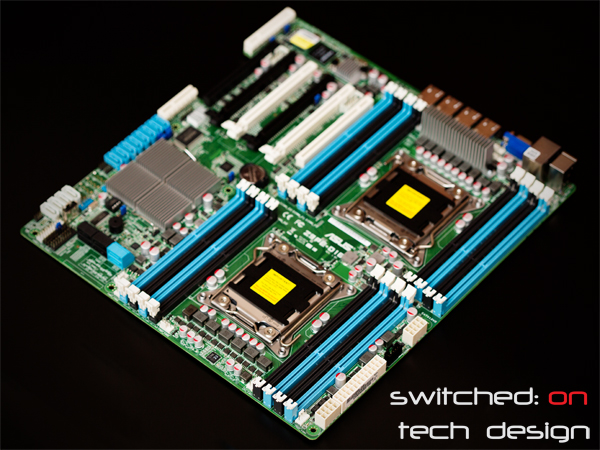

Here is the follow-up to our initial review of the Asus Z9PE-D16 motherboard!

First up: the interesting point we mentioned at the end of the previous review. One thing the specification sheet doesn't explicitly state is that the ONLY things connected to CPU2 are three of the PCI-Express slots and that CPU's eight RAM slots. With only one CPU installed, you get a fully featured board minus those three PCI-E slots and eight DIMM slots. That makes this board (at around $600AU) incredibly attractive for a single-CPU storage chassis: you get ten SATA ports (up to 18 with the optional eight SAS ports), quad-gigabit networking (an i350-T4 costs $350 as a plug-in card), remote control via ASWM and three PCI-E 3.0 slots for further expansion. What's not to love?

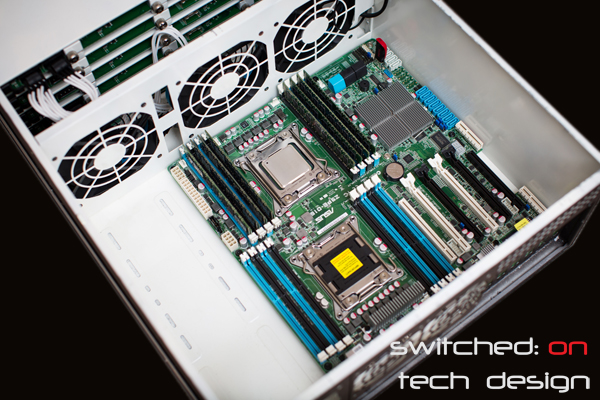

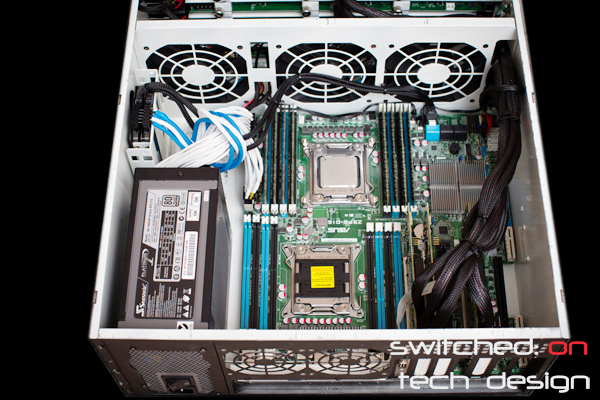

With this in mind, we built an example system using just one CPU in a Norco 4224 storage chassis.

The motherboard fits neatly and without hassle, with its far edge all but touching the fan wall. This means all-in-one liquid coolers such as the H100 won't fit, as the radiator and fans would obstruct the RAM slots. Since this is a storage-oriented setup, we're installing three IBM M1015 cards in IT mode. As you can see, the PCI-E slots from CPU1 are reasonably well spaced:

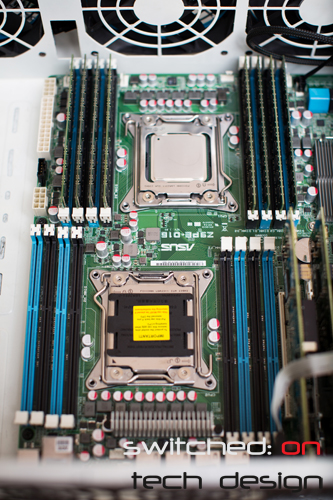

The cable looping over the cards runs from the bottom serial header to a serial port adapter in the topmost expansion slot. Even with every PCI-E slot filled, that top slot stays free for a USB, serial or similar header bracket. If you are using a single CPU, it must go in the CPU1 socket, which is the one closest to the fan wall in our setup:

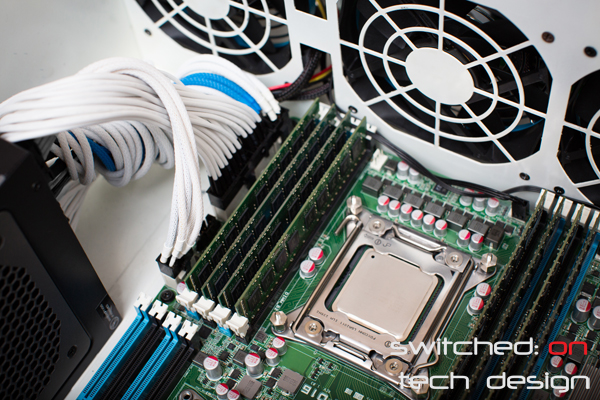

The sockets aren't significantly staggered, so if and when you install a second CPU it will likely run hotter than the first, as it will be drawing in CPU1's hot exhaust air as its intake air. With the current generation of non-overclockable Xeons, however, that's unlikely to be a significant issue. Next we connect the 24-pin and two 8-pin CPU power connectors:

One thing to note in that photo is how close the fan headers are to the fan wall. They are easily accessible, and their proximity makes cable management (and therefore good airflow) a breeze. Behind the power cables we add a 2.5″ HDD bracket for a pair of SSDs:

The SATA ports on the motherboard point directly into the fan wall, which makes connecting and disconnecting cables slightly tricky once the board is installed. Otherwise, the bracket attaches neatly to the wall with minimal impact on airflow. Now we add the SAS cables, and the build is complete apart from the CPU cooler:

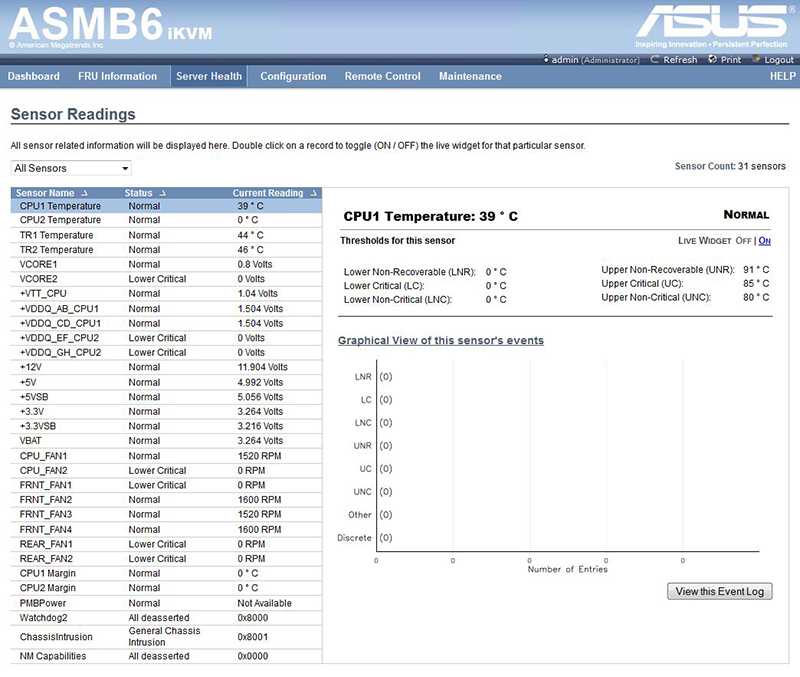

Voila. The board booted the first time, and updating the BIOS was easy. The BIOS itself is responsive and easy to navigate, and gave us access to everything we could think of needing. Setting up the ASMB6 KVM was also simple: boot from the provided CD, enter the desired static IP, netmask and admin password, then log in via a browser. The little KVM chip took more than 30 seconds to process each line of input, so if you're wondering whether it's working, just give it time; it will get there eventually. The ASMB6 KVM sensor readout looks like this:

The remote control feature works extremely well and shows you exactly what you would see with a screen plugged in. It is also usable over wifi, at least over Wireless N; we didn't test anything slower. As the screenshot above shows, you get as many sensors as you're likely to need! Sharp-eyed readers will have noticed a USB stick in the motherboard in the photos above; we installed ESXi 5.0 Update 1 to it without hassle, then passed the M1015s through to a storage VM. Success! It was an extremely easy motherboard to work with.

Our conclusion is that this is an extremely feature-rich motherboard at a very good price. The integrated quad-gigabit ports, onboard KVM, full complement of x16 PCI-E slots, optional PIKE controller and full complement of RAM slots make for a potent platform to base your server around. The x16 PCI-E slots deserve particular mention: many other server boards give you x8 slots, and while that may be fine for most HBAs, RAID cards and NICs, you will run into trouble if you ever want to experiment with passing video cards through to VMs or use a more expensive x16 RAID card. We always feel that keeping your options open is a good idea, and given how popular virtualization is becoming, you never know what you might be trying 12 months from now.

The PIKE card is a bit of a mixed bag. At around $200 you get a card that can be flashed to IR or IT mode in the same way as an M1015, the catch being that it has only an x4 electrical connection rather than the x8 an M1015 nominally uses. If you connect spinning disks to the PIKE connector you will likely never notice the difference, even with 7200RPM drives; with a number of SSDs, however, the narrower link may become a bottleneck. If you are mostly using spinning disks, the PIKE card could very well free up a PCI-E slot that would otherwise be occupied by an HBA or RAID card.
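To put rough numbers on the x4 versus x8 trade-off, here is a back-of-the-envelope sketch in Python. It assumes both links run at PCIe 2.0 speeds (about 500 MB/s per lane per direction after 8b/10b encoding), eight drives on the controller, and sequential rates of roughly 150 MB/s for a 7200RPM disk and 450 MB/s for a SATA SSD. The per-drive figures and the helper names are illustrative assumptions, not measurements from our build.

```python
# Back-of-the-envelope check: can a PCIe link keep up with the drives behind it?
# All throughput figures are illustrative assumptions, not measurements.

# Assumed usable bandwidth per PCIe 2.0 lane, per direction, after 8b/10b encoding (MB/s).
PCIE2_LANE_MBPS = 500

# Hypothetical sequential throughput per drive (MB/s).
HDD_7200RPM_MBPS = 150
SATA_SSD_MBPS = 450

DRIVES = 8  # assumed: eight drives hanging off one controller


def link_bandwidth(lanes: int, per_lane_mbps: int = PCIE2_LANE_MBPS) -> int:
    """Approximate one-direction bandwidth of a PCIe link, in MB/s."""
    return lanes * per_lane_mbps


def is_bottleneck(drives: int, per_drive_mbps: int, lanes: int) -> bool:
    """True if the drives' combined sequential throughput exceeds the link."""
    return drives * per_drive_mbps > link_bandwidth(lanes)


if __name__ == "__main__":
    for label, rate in [("7200RPM HDD", HDD_7200RPM_MBPS), ("SATA SSD", SATA_SSD_MBPS)]:
        for lanes in (4, 8):  # x4 (PIKE) versus x8 (M1015)
            verdict = "bottleneck" if is_bottleneck(DRIVES, rate, lanes) else "fits"
            print(f"{DRIVES} x {label}: {DRIVES * rate} MB/s vs x{lanes} link "
                  f"({link_bandwidth(lanes)} MB/s) -> {verdict}")
```

Under these assumptions, eight spinning disks (about 1,200 MB/s combined) fit comfortably within an x4 link, while eight SSDs (about 3,600 MB/s) exceed x4 but still fit within x8. Real-world throughput will sit below these ceilings once protocol overhead is counted, so treat this only as a sanity check.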

The final word? Highly recommended. We were so impressed that we’re now using this board as a staple in our server builds, so don’t be surprised to see it in your next Switched On Tech Design storage chassis!
